If you have ever worked with embedded systems, then you have probably heard that dynamic memory allocation is evil. This opinion is usually presented as an indisputable fact, and the blame is placed mainly on the inner workings of the memory allocator, the piece of software responsible for managing dynamic memory allocations.

But should we really never use dynamic allocations in embedded systems? In this post I would like to propose that the answer is maybe. I will start by considering the pros and cons of dynamic memory allocations from an embedded systems point of view. Then I will show a simple implementation of a memory allocator in C so we can understand better how they work. At the end I will present my conclusions on this topic.

Why Dynamic Memory Allocation is Useful

First, I think we should start with the obvious question: why would we want to use dynamic allocation on our embedded code?

To better understand the advantages of using dynamic allocation, let’s consider a (simplified) real-life example.

A Simplified Example

Suppose you are developing a device that receives data from a client via some communication interface. The actual implementation of the communication interface does not matter, but you can imagine it might be a wired SPI or I2C based protocol, an RS-232 or RS-485 interface, or even a wireless Bluetooth, Wi-Fi or LoRA radio communication.

The important thing to know is that the data received from the client consists of a command byte followed by a sequence of bytes called the payload. Each command has a specific meaning, and the size of the payload is pre-determined by the command.

After receiving the command, the device will store and process the payload. Later, it will send the client a response, which also has a specific number of bytes for each command.

Let’s consider some actual commands, payload sizes and response sizes for our example:

| Command | Payload Size (bytes) | Response Size (bytes) |

|---|---|---|

| 0x00 – Get device information | 8 | 128 |

| 0x01 – Get device data 01 | 8 | 64 |

| 0x02 – Get device data 02 | 8 | 2048 |

| 0x03 – Set device parameter 01 | 128 | 4 |

| 0x04 – Set device parameter 02 | 4096 | 4 |

As we can see in the table above, the payload size of each command can vary a lot, from 8 bytes (commands 0x00, 0x01 and 0x02) to 4096 bytes (command 0x04). The response sizes have a wide range as well, from 4 bytes (commands 0x03 and 0x04) to 2048 bytes (command 0x02).

To receive the payloads and transmit the responses, we normally create reception (rx) and transmission (tx) buffers. The reception buffer is used to store the payload as it is received by the device, and then it is passed to a function that processes the payload. The transmit buffer is used to store the data bytes that will be sent in the response. The reception and transmission of bytes is usually done via interrupts or DMA.

Below is an incomplete, bare-bones implementation of the communication logic using interrupts, divided into the communication.c and command_processor.c files:

#include <stdint.h>

#include "command_processsor.h"

enum communication_state {

WAITING_COMMAND, //

RECEIVING_PAYLOAD, //

PROCESSING_PAYLOAD, //

SENDING_RESPONSE //

};

enum communication_commands {

GET_DEVICE_INFO = 0x00,

GET_DEVICE_DATA_01 = 0x01,

GET_DEVICE_DATA_02 = 0x02,

SET_DEVICE_PARAMETER_01 = 0x03,

SET_DEVICE_PARAMETER_02 = 0x04,

COMMAND_END

};

struct communication {

enum communication_state state;

uint8_t command;

uint8_t *rx_buf;

uint8_t *tx_buf;

size_t rx_index;

size_t tx_index;

size_t payload_len;

size_t response_len;

};

static struct communication comm = { //

.state = WAITING_COMMAND,

.command = 0x00,

//.rx_buf = Initialize reception buffer

//.tx_buf = Initialize transmission buffer

.rx_index = 0,

.tx_index = 0,

.payload_len = 0,

.response_len = 0};

// Checks if given command is valid

int is_command_valid(uint8_t command) {

return command < (uint8_t)COMMAND_END;

};

// Signals that payload is processed

void signal_payload_processed(void) {

if (comm.state == PROCESSING_PAYLOAD) {

// Go to 'SENDING_RESPONSE' state

comm.state = SENDING_RESPONSE;

// Enable 'send_byte' interrupt

enable_send_byte_interrupt();

}

}

// Returns payload length for given command

size_t get_payload_len(uint8_t command) {

size_t payload_len;

switch (command) {

case GET_DEVICE_INFO:

payload_len = 8u;

break;

case GET_DEVICE_DATA_01:

payload_len = 8u;

break;

case GET_DEVICE_DATA_02:

payload_len = 8u;

break;

case SET_DEVICE_PARAMETER_01:

payload_len = 128u;

break;

case SET_DEVICE_PARAMETER_02:

payload_len = 4096u;

break;

default:

payload_len = 0u;

break;

}

return payload_len;

}

// Returns expected response length for given command

size_t get_response_len(uint8_t command) {

size_t response_len;

switch (command) {

case GET_DEVICE_INFO:

response_len = 128u;

break;

case GET_DEVICE_DATA_01:

response_len = 64u;

break;

case GET_DEVICE_DATA_02:

response_len = 2048u;

break;

case SET_DEVICE_PARAMETER_01:

response_len = 4u;

break;

case SET_DEVICE_PARAMETER_02:

response_len = 4u;

break;

default:

response_len = 0u;

break;

}

return response_len;

}

// Interrupt raised when we receive a data byte

__interrupt void byte_received() {

switch (comm.state) {

case WAITING_COMMAND: {

// Save command byte and go to receiving payload state

// if command is valid

comm.command = RX_BYTE;

if (is_command_valid(comm.command)) {

comm.state = RECEIVING_PAYLOAD;

comm.rx_index = 0;

comm.payload_len = get_payload_len(comm.command);

}

} break;

case RECEIVING_PAYLOAD: {

comm.rx_buf[comm.rx_index++] = RX_BYTE;

if (comm.rx_index >= comm.payload_len) {

// Go to processing payload state

comm.state = PROCESSING_PAYLOAD;

// Disable data reception

disable_byte_received_interrupt();

comm.response_len = get_response_len(comm.command);

signal_to_process_payload(comm.command, //

comm.rx_buf, //

comm.payload_len, //

comm.tx_buf, //

comm.response_len);

}

} break;

default:

// Ignore received byte

// because we are not in a reception state

break;

}

}

// Interrupt raised when we are ready to send a response byte

__interrupt void ready_to_send_byte() {

switch (comm.state) {

case SENDING_RESPONSE: {

TX_BYTE = comm.tx_buf[comm.tx_index++];

if (comm.tx_index >= comm.response_len) {

// Return to waiting command state

comm.state = WAITING_COMMAND;

// Disable data transmission

disable_send_byte_interrupt();

// Re-enable data reception

enable_byte_received_interrupt();

}

} break;

default: {

// Disable interrupt, because we should not be here

disable_send_byte_interrupt();

}

}

}

#include <stdint.h>

typedef struct processor {

uint8_t *payload;

uint8_t *response_buf;

size_t payload_len;

size_t response_len;

uint8_t current_command;

} processor_t;

static processor_t command_processor;

// Signal to main loop or main thread that we received a payload

// and should process it

void signal_to_process_payload(uint8_t command,

uint8_t *payload,

size_t payload_len,

uint8_t *response_buf,

size_t response_len) {

// Store command, payload and response buffer context

command_processor.current_command = command;

command_processor.payload = payload;

command_processor.payload_len = payload_len;

command_processor.response_buf = response_buf;

command_processor.response_len = response_len;

/* Signal main loop/thread to wake up and call

* 'process_payload' function

*/

// TODO

}

void process_payload() {

switch (command_processor.current_command) {

/* 1. Select command */

// TODO

/* 2. Process payload stored in 'payload' pointer */

// TODO

/* 3. Write response in 'response_buf' pointer */

// TODO

/* 4. Signal to go to 'SENDING_RESPONSE' state

by signaling that payload is processed. */

signal_payload_processed();

}

}

Using Static Memory Allocation

As you can see in the highlighted lines of the code in the previous section, we did not initialize the receive and transmit buffers in our code. One way to create these buffers is by using static memory allocation. That is, we define a global array of bytes for each of the buffers, and use these arrays to store the data:

#include <stdint.h>

#define RX_BUFFER_BYTE_LEN 4096

#define TX_BUFFER_BYTE_LEN 2048

static uint8_t rx_buf[RX_BUFFER_BYTE_LEN];

static uint8_t tx_buf[TX_BUFFER_BYTE_LEN];

static struct communication comm = {

.state = WAITING_COMMAND,

.command = 0x00,

.rx_buf = rx_buf,

.tx_buf = tx_buf,

.rx_index = 0,

.tx_index = 0,

.payload_len = 0,

.response_len = 0

};

In the code above, we used a length of 4096 bytes for the receive buffer, while the transmit buffer was given a length of 2048 bytes, giving a total of 6144 bytes set aside for the buffer. This is because the size of static memory allocations is determined at compile time, so we have to consider the worst-case scenario for both the payload and the response to determine the array lengths and make sure we don’t run out of memory when receiving and responding to commands.

| Largest Payload Size (bytes) | Largest Response Size (bytes) |

|---|---|

| 4096 (command 0x04) | 2048 (command 0x02) |

The problem with this approach is that in practice our device might never face the worst-case scenario, or even if it does, it might happen very rarely. However, since the size of the memory that is statically allocated cannot be changed at runtime, it will just sit there mostly unused for the whole execution of our program, while it could have been used by other tasks that our device executes.

This can become a huge waste when the worst-case scenario requires much more memory than the other cases. In our example, if we ignore command 0x04, then our worst-case for the payload falls to only 128 bytes, a 32-fold reduction in the required rx buffer size.

Using Dynamic Memory Allocation

At this point, the only way to improve the efficiency of our memory usage is to use dynamic memory allocation. The main difference between dynamic allocation and static allocation is that in dynamic allocations, memory is allocated during the program execution and can be “returned” once it is no longer in use, allowing other tasks to use it.

There are many ways to implement dynamic allocations, but it usually boils down to separating a section of our device’s memory for dynamic use, and then creating a memory allocator, which is used to manage the memory. The section of memory used by the memory allocator is often called free store, heap, heap memory, heap segment, or dynamic memory. From here on, we will call it heap or heap memory.

The memory allocator must expose at least two functions, usually called malloc (memory allocate) and free (free memory), which are used to allocate and deallocate memory dynamically, as shown in the code below:

// Allocates a block of memory of size equal // or greater than 'size_bytes'. // Returns pointer to start of memory block, // or NULL if it was not possible to allocate // the memory block. void *malloc(size_t size_bytes); // Frees block of memory pointed by 'memory_ptr'. void free(void *memory_ptr);

Returning to our example, instead of pre-allocating the memory statically, we could allocate the memory as the command is received using malloc. We request only the number of bytes required for either the payload or for the response. Then, once we have used the buffer and the memory is no longer needed, we return it by calling free.

In the code below, we have replaced the use of static allocations with dynamic allocations, highlighting the calls to malloc and free.

#include <stdint.h>

#include <stddef.h>

#include "command_processor.h"

enum communication_state {

WAITING_COMMAND, //

RECEIVING_PAYLOAD, //

PROCESSING_PAYLOAD, //

SENDING_RESPONSE //

};

enum communication_commands {

GET_DEVICE_INFO = 0x00,

GET_DEVICE_DATA_01 = 0x01,

GET_DEVICE_DATA_02 = 0x02,

SET_DEVICE_PARAMETER_01 = 0x03,

SET_DEVICE_PARAMETER_02 = 0x04,

COMMAND_END

};

struct communication {

enum communication_state state;

uint8_t command;

uint8_t *rx_buf;

uint8_t *tx_buf;

size_t rx_index;

size_t tx_index;

size_t payload_len;

size_t response_len;

};

static struct communication comm = { //

.state = WAITING_COMMAND,

.command = 0x00,

.rx_buf = NULL, // initialize as null

.tx_buf = NULL, // initialize as null

.rx_index = 0,

.tx_index = 0,

.payload_len = 0,

.response_len = 0};

// Checks if given command is valid

int is_command_valid(uint8_t command) {

return command < (uint8_t)COMMAND_END;

};

// Signals that payload is processed

void signal_payload_processed(void) {

if (comm.state == PROCESSING_PAYLOAD) {

// Go to 'SENDING_RESPONSE' state

comm.state = SENDING_RESPONSE;

// Enable 'send_byte' interrupt

enable_send_byte_interrupt();

}

}

// Returns payload length for given command

size_t get_payload_len(uint8_t command) {

size_t payload_len;

switch (command) {

case GET_DEVICE_INFO:

payload_len = 8u;

break;

case GET_DEVICE_DATA_01:

payload_len = 8u;

break;

case GET_DEVICE_DATA_02:

payload_len = 8u;

break;

case SET_DEVICE_PARAMETER_01:

payload_len = 128u;

break;

case SET_DEVICE_PARAMETER_02:

payload_len = 4096u;

break;

default:

payload_len = 0u;

break;

}

return payload_len;

}

// Returns expected response length for given command

size_t get_response_len(uint8_t command) {

size_t response_len;

switch (command) {

case GET_DEVICE_INFO:

response_len = 128u;

break;

case GET_DEVICE_DATA_01:

response_len = 64u;

break;

case GET_DEVICE_DATA_02:

response_len = 2048u;

break;

case SET_DEVICE_PARAMETER_01:

response_len = 4u;

break;

case SET_DEVICE_PARAMETER_02:

response_len = 4u;

break;

default:

response_len = 0u;

break;

}

return response_len;

}

// Interrupt raised when we receive a data byte

__interrupt void byte_received() {

switch (comm.state) {

case WAITING_COMMAND: {

// Save command byte and go to receiving payload state

// if command is valid

comm.command = RX_BYTE;

if (is_command_valid(comm.command)) {

comm.state = RECEIVING_PAYLOAD;

comm.rx_index = 0;

comm.payload_len = get_payload_len(comm.command);

// Allocate required memory for payload

comm.rx_buf = (uint8_t *)malloc(comm.payload_len);

}

} break;

case RECEIVING_PAYLOAD: {

comm.rx_buf[comm.rx_index++] = RX_BYTE;

if (comm.rx_index >= comm.payload_len) {

// Go to processing payload state

comm.state = PROCESSING_PAYLOAD;

// Disable data reception

disable_byte_received_interrupt();

comm.response_len = get_response_len(comm.command);

// Allocate memory for tx_buf

comm.tx_buf = (uint8_t *)malloc(comm.response_len);

// Signal to process payload

signal_to_process_payload(comm.command, //

comm.rx_buf, //

comm.payload_len, //

comm.tx_buf, //

comm.response_len);

}

} break;

default:

// Ignore received byte

// because we are not in a reception state

break;

}

}

// Interrupt raised when we are ready to send a response byte

__interrupt void ready_to_send_byte() {

switch (comm.state) {

case SENDING_RESPONSE: {

TX_BYTE = comm.tx_buf[comm.tx_index++];

if (comm.tx_index >= comm.response_len) {

// Return to waiting command state

comm.state = WAITING_COMMAND;

// Free tx_buf

free(tx_buf);

// Disable data transmission

disable_send_byte_interrupt();

// Re-enable data reception

enable_byte_received_interrupt();

}

} break;

default: {

// Disable interrupt, because we should not be here

disable_send_byte_interrupt();

}

}

}

#include <stdint.h>

typedef struct processor {

uint8_t *payload;

uint8_t *response_buf;

size_t payload_len;

size_t response_len;

uint8_t current_command;

} processor_t;

static processor_t command_processor;

// Signal to main loop or main thread that we received a payload

// and should process it

void signal_to_process_payload(uint8_t command,

uint8_t *payload,

size_t payload_len,

uint8_t *response_buf,

size_t response_len) {

// Store command, payload and response buffer context

command_processor.current_command = command;

command_processor.payload = payload;

command_processor.payload_len = payload_len;

command_processor.response_buf = response_buf;

command_processor.response_len = response_len;

/* Signal main loop/thread to wake up and call

* 'process_payload' function

*/

// TODO

}

void process_payload() {

switch (command_processor.current_command) {

/* 1. Select command */

// TODO

/* 2. Process payload stored in 'payload' pointer */

// TODO

/* 3. Free payload memory */

free(command_processor.payload);

/* 4. Write response in 'response_buf' pointer */

// TODO

/* 5. Signal to go to 'SENDING_RESPONSE' state

by signaling that payload is processed. */

signal_payload_processed();

}

}

Now, with our new implementation of the program, we only use the number of bytes that are required to store the payload and the response for the specific command we have received. This means that our worst-case scenario does not have to consider the worst-case payload and response individually, but the worst-case command, since memory will be allocated as the command is received.

We can see that the command that requires the most memory is command 0x04, with 4096 bytes for the payload and 4 bytes for the response, totaling 5000 bytes. This is 1144 fewer bytes or 18 % less than the similar static implementation using 2 buffers.

Another benefit of the dynamic implementation is that once we have finished using the buffers, we free them to allow other parts of our program to use the memory, eliminating waste.

Isn’t it neat?

Well, there are actually some ugly wrinkles in this story. But first, there is something very important we must point out.

A Very Important Note on Dynamic Memory Allocations

There is one crucial thing that might not have become clear from the previous discussion. When we are talking about bare-metal embedded systems, or systems that are running a regular RTOS, under the rugs dynamic memory allocation is actually static allocation.

What?

Yes, you heard that right. In the end, the heap memory used in dynamic allocations is just memory. What makes dynamic allocations “dynamic” is the memory allocator, and the heap memory it allocates from is actually statically allocated. When using dynamic allocations, we still need to pre-allocate a portion of our RAM for dynamic use, and the memory allocator just provides the interface to request blocks of that memory and to “return” that memory once we don’t need it anymore.

We can pre-allocate the heap memory by simply defining a big array and passing it to our memory allocator, or by separating a section of memory for dynamic use in our linker script, but the result is the same: dynamic memory allocation does not provide extra memory in no way, shape or form, but only gives us more flexibility on how we use memory during the execution of our program. This flexibility can mean that we save memory compared to a similar static implementation, but we can always tweak our static implementation to use the same amount of memory.

So, looking back at our previous example, when we used dynamic allocation for the rx and tx buffers, under the hood we would still have to make sure we pre-allocated at least 5000 bytes for our heap to handle the worst-case command scenario. This is less than the 6144 bytes that we used for the static implementation we presented, but this is only because in the static implementation we used separate buffers for the rx and tx buffers.

Imagine that instead we used a single buffer to first store the received payload, and then continued using it to store the response that will be transmitted. In this case, we could also get away with a buffer of 5000 bytes, since that’s the worst-case for the combined payload + response sizes. Of course, our code would get a bit more complex, and we would have to keep track of buffer indexes to make sure the tx and rx buffers don’t overrun each other, but that is completely doable.

What I’m really trying to say is that dynamic memory allocation is at its core a kind of “managed” static memory. Just like the memory used in static allocations, it is finite and pre-allocated. The only difference is that it has an interface that streamlines its use, allowing memory to be more easily shared between different tasks.

If the memory used in dynamic allocations is actually pre-allocated and has a maximum size, this means we can run out of it. But then what would happen if we tried to request more memory than what is available?

Why Memory Allocators Suck

Now that we have seen how dynamic allocation can help making our code more flexible, let’s consider some of the reasons for avoiding its in embedded systems.

Memory allocators can fail

This is a point we touched in the previous section. Dynamic allocation has a limit, and if there is not enough memory left, an allocation request made to the memory allocator will fail. What this usually means is that instead of returning a pointer to a block of the heap, the memory allocator will return a null pointer.

How do we deal with this failure? Well, that’s up to you, the designer of the system.

Depending on the task that we are executing, we may simply ignore the failure, and try to allocate memory again later, hoping enough memory was released by another task in the meantime.

In our previous example, a failed allocation would mean that we either wouldn’t be able to receive a command, or we would receive it, but wouldn’t be able to respond to it in a timely manner, if at all. This might be acceptable for some devices, as long as the device eventually resumed working (though I believe most people would prefer a system that is guaranteed to receive to commands and respond in a predictable way).

An airplane control system simply cannot stop working because it could not allocate memory. A medical device that needs to constantly monitor a patient should never freeze because some task has used all the heap memory. For such safety-critical devices, dynamic allocation is strongly discouraged, and many of the standards related to such system completely prohibit its use or place a lot of restrictions on how it can be used.

Memory allocators can degrade over time

There is even worse news regarding memory allocation failure. Sometimes, an allocation request will not succeed even if there is enough free heap memory. This is due to a phenomenon known as memory fragmentation.

To understand fragmentation, let’s consider the following scenario.

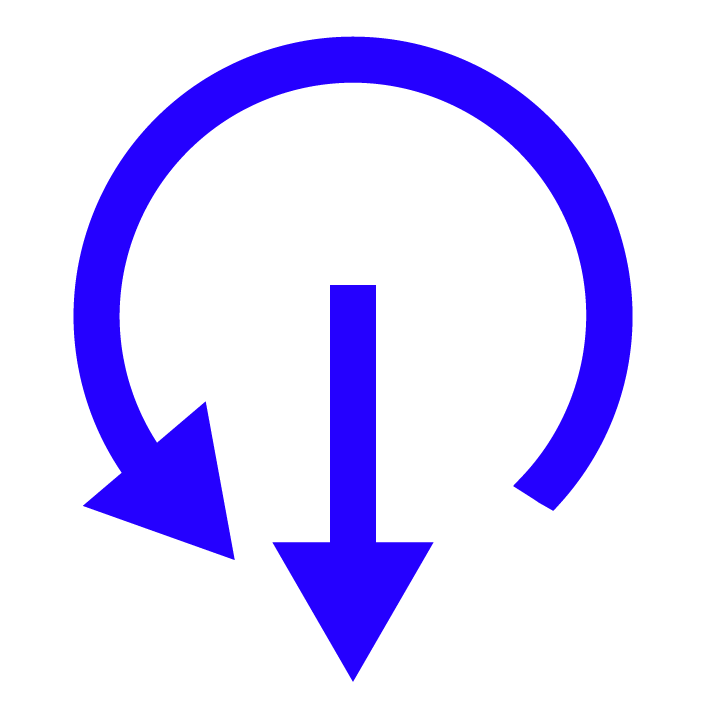

We start with a completely free section of heap memory with total size of 3 kiB (3072 bytes).

Then, we allocate in sequence 3 blocks of memory, each with 1 kiB (1204 bytes) in size, completely using the our heap memory.

After that, we free the first and the last block, leaving only the middle block allocated.

As we can clearly see in the picture above, after freeing block_1 and block_3 we have 2 kiB (2048 bytes) of memory left, as expected. This memory however is not contained in a single chunk but fragmented (divided) in two blocks of 1 kiB (1024 bytes) each.

If we now try to allocate more than 1024 bytes at once, the allocation will fail, because it won’t find a contiguous block of memory with that many bytes, even though technically there is more than 1024 bytes free. We can’t make full use of our heap memory because it is fragmented.

Fragmentation is a natural process that tends to increase the longer we use dynamic allocation. It can happen faster if we make many allocations and deallocations and will get worse as we reach the limit of our heap memory. Even if we try to minimize it, in most practical cases, fragmentation is unavoidable.

There are techniques used by memory allocators to reverse fragmentation, and some can work really well. But they many make use of extra algorithms and will consume processing cycles from our microprocessor, increasing the latency of our system.

Which brings us to our next point.

Memory allocators can slow down our system

By its very nature, dynamic allocations will make our code slower when compared to using static allocations.

First, when we call malloc, the memory allocator has to find a suitable block of memory to fulfill all request. Depending on the implementation of the allocator, the time taken by this operation might depend on the number of free blocks in heap memory, taking or even

operations on average, where n is the number of free blocks.

Some implementations of allocators make this search and allocate operation have a average time-complexity (constant time), but even in the best of cases it will take more time than simply directly accessing pre-allocated memory.

Then there is the free function. There are also ways to make this function have an average time complexity, but most allocators include some algorithm to merge the released block to adjacent blocks if possible. This involves extra computation that will slow down our program. Moreover, while the average time complexity is constant, the worst-case time complexity of many implementations can be

, or linear time, for both the

free and malloc functions.

In the end, we should avoid calling malloc and free in interrupts or high priority tasks. We did exactly that in our real-life example from the previous section. This means that to ensure the responsiveness of our device, we would have to refactor that code and find a way to call these functions outside of the interrupt, which may not always be possible.

Memory allocators can cause bugs

This is a big one. There are quite a few surveys that indicate that the improper use of dynamic allocation is one of the major causes of bugs.

There are actually multiple ways to bugs can crop up in our code by incorrectly using dynamic allocation.

Memory leaks

We request a block of memory by calling malloc and return it to the memory pool by calling free. But what if we forget to call free? Then we have what is called a memory leak.

Like the name implies, a memory leak drains our heap memory. Any block of memory that is unreleased cannot be used by the memory allocator, so it will just sit idly, wasted, unable to be re-allocated to another task.

A memory leak acts effectively as a reduction in our total heap memory. The larger the block of memory that is not freed, the greater this reduction. The only way to make the leak go away is to reset the system and hope that our program takes a different execution path that causes the memory to be released.

Double frees

Double frees are kind of the inverse of memory leaks. They happen when we call free on a block of memory that is already released. That is, if memory leaks are caused by an underuse of the free function, double frees result from overusing it.

Some memory allocators are smart enough to recognize a double free, preventing bugs. Many implementations, however, are not that smart, and a double free may corrupt the memory allocator, causing undefined behavior.

Use after free

A use after free bug happens when we try to access a block of heap memory after we released it using the free function. The pointers to memory that was already released are known as dangling pointers, and they are the source of nasty bugs.

A released block of memory might still hold the old information we stored there; in which case the program will appear to run normally. But since the block is free, it may be re-allocated, and the information there can be overwritten by the new task that now rightfully holds that memory. This will cause the old data to be corrupted, leading to unexpected behavior. The inverse is also true, and the old task can corrupt the data accessed by the new task.

Even worse, the old task might overwrite some memory allocator metadata stored in the block of heap memory. This will cause the memory allocator to stop working correctly and can even lead to a complete program crash.

Null dereference

A null dereference happens when we try to access the object pointed by a null pointer, leading to unpredictable behavior. While there are many sources of null dereference bugs, the use of dynamic memory allocation is one of the major contributors.

We have to remember that memory allocations can fail, and when that happens, the malloc function returns a null pointer. If we do not check the this return value, we may incorrectly believe that the returned pointer is valid and start using it, which will lead to a null dereference, and possibly a system crash.

Indeterminate values

When we allocate memory by calling malloc, there is not guarantee as to what values will be already contained in the block of memory. This is something that must be taken into consideration, otherwise we may get undesirable behavior by getting variables initialized to random values.

For many memory allocators, there is a variant of malloc called calloc (clear memory allocate), which initializes the allocated memory bytes to zero. However, this has causes an execution overhead that might not always be acceptable.

Memory allocators can waste memory

As we have discussed before, dynamic allocation does not give us extra memory, but only provides more flexibility in using the memory we have available. However, the truth is that memory allocators actually waste our available memory, and in more ways than one.

The first way that memory allocators waste memory is by introducing an unavoidable memory overhead. To manage dynamic allocation, memory allocators have to keep some metadata that allows them to know if there are free blocks of memory, where they are located and how big they are. This metadata is usually stored for each chunk of heap memory, free or allocated, causing an overhead that is proportional to the number of blocks we have.

The size of the metadata is usually around 1 to 4 words (4 to 16 bytes for 32-bit systems) per block of memory. This doesn’t sound like much, but if your system is prone to use small blocks of memory (around the size of the metadata itself), this overhead can eventually consume 50 % or more of the heap.

The other way memory allocators can waste memory is by giving more of it than you asked for. Some implementations of memory allocators use a bin algorithm to sort the sizes of free memory blocks. When an allocation is requested, the memory allocator will search for a free block in the smallest bin that can hold the number of requested bytes. This means that we will usually get some extra bytes that will remain unused, wasting memory.

Memory allocators can be unnecessary

The final drawback of memory allocators is that they are often used in situations where they bring not real benefit to our program. This is not something that we can blame on the allocators themselves, but on our own poor understanding of how dynamic allocation works.

To illustrate this, let’s think back on the client-device example we presented in the previous section. With the information we have now, we can clearly list some drawbacks of using dynamic memory allocation in that application:

- Possibility of not receiving a command or not responding to it if memory allocation fails.

- Introduction of non-determinism and reduced memory caused by fragmentation.

- Increased latency due to

mallocandfreefunction calls. - Possible introduction of bugs such as use after free, double free, memory leaks, etc.

- Probably higher memory requirement when compared with static allocation due to memory overheads and memory waste of allocations.

Now, what about the benefits of using dynamic allocation? We already talked about having a more flexible memory usage, but what does that actually mean?

It turns out that for embedded systems running bare-metal or simple RTOs on single core processors, this flexibility usually means nothing at all.

Dynamic allocation can be very useful when we talk general-purpose computers. In this case, its use allows us to create software that is more flexible, and that also plays “nice” by releasing unused memory to other applications that run concurrently or in parallel. The memory overhead is usually not a problem, since there is a lot of memory available in these devices – which also means allocation failures rarely happen. The execution speed penalty is also not too bad, since the processors tend to be very fast. Bugs are still an issue, but this can be overcome with the use of a garbage collector or other memory management techniques.

But for embedded systems, none of the above is true most of the time. There is only one application running, so there’s no one else to share memory with. We do care about the memory overhead and the increased execution time, so we can’t ignore that. And using a garbage collector is out of question for the majority of systems.

So, for our original example, the use of dynamic allocation seems to bring no gain, while having a lot of drawbacks. Which means we probably shouldn’t use it in the first place.

A Simple Memory Allocator

After looking at the benefits and the many negatives of dynamic memory allocations, it would be useful to look at the implementation of a memory allocator so we can have a better understanding of how this piece of software works.

There are many different allocator implementations, and some are very well known and widely used. Here are some of note:

- Doug Lea’s memory allocator, also known as dlmalloc. A general-purpose allocator. Used in the GNU C library and some versions of Linux.

- mimalloc, a general-purpose allocator developed by Microsoft.

- jemalloc, a general-purpose allocator that focuses on fragmentation avoidance. Used as the allocator in FreeBSD libc.

- rpmalloc, a general-purpose, performant memory allocator created by Mattias Jansson.

- tcmalloc, a general-purpose, multi-threaded allocator created and used by Google. Developed in C++.

- Hoard, a general-purpose, memory efficient allocator developed by Emery Berger.

- SHMALL, a small memory allocator developed by Chris Careaga for educational purposes.

In this section, I will present a simple allocator implementation. While this won’t be a production grade allocator, it will be completely functional. Other characteristics of this simple implementation are:

- Includes

mallocandfreefunctions with standard parameters and return values. - Very short implementation, with about 100 lines of code.

- Uses first-fit algorithm when allocating blocks of memory, splitting the memory block if it is larger than required, reducing memory waste.

- Has memory alignment logic that forces the start address of allocated blocks of memory to be a multiple of a certain power of 2. This can be useful to speed up accesses to the allocated memory.

- Coalesces freed blocks of memory to avoid fragmentation (this is good).

- Is not thread safe (this is not good).

mallocfunction has an average and worst-case time complexity ofwhere

is the number of free blocks (this is bad).

freefunction also has an average and worst-case time complexity ofwhere

is the number of free blocks (also bad).

Initialization

Before using our memory allocator, we have to initialize it. We do this by calling the allocator_init function, whose signature is as follows:

void allocator_init(void *memory_addr, size_t memory_size, uint8_t align_power);

As we can see, allocator_init takes three arguments:

memory_addr: A pointer to the start of the heap memory. This can be just an array on the stack, or the actual start of the .heap segment of our RAM. We expect this address to already be aligned.memory_size: The number of bytes in our heap.align_power: A non-negative number that is used to calculate the memory alignment. Since the alignment must be a power of two, this number is actually the exponent used to calculate the alignment, so. For example, if

align_poweris 3, then the alignment size will bebytes.

Before showing the implementation of the init function, we have to understand that our allocator is implemented using just two structs:

block_header: Represents the metadata of a free block of memory. Contains 2 members,next, which points to the header of the next free block of memory; andsize, which represents the size of the block of memory, excluding the header.

The header is placed in the heap memory, at the very start of the block to which it refers.

typedef struct block_header {

struct block_header *next;

size_t size;

} block_header_t;

allocator: Represents the memory allocator and its state.

Contains a pointer to the start of the heap memory, the total memory size in bytes, the alignment size, the aligned header size, a linked list of the current free blocks of memory, and a boolean indicating if the allocator has been initialized.

typedef struct allocator {

void *memory;

size_t memory_size;

size_t alignment_size;

size_t aligned_header_size;

block_header_t *free_blocks;

bool is_init;

} allocator_t;

We assume there is a single allocator in our program, and define a static variable of type allocator_t to represent it. When allocator_init is called, we will just initialize the members of this variable:

/** Memory allocator static global variable. */

static allocator_t allocator = {.is_init = false};

void allocator_init(void *memory_addr, size_t memory_size,

uint8_t align_power) {

// Initialize alignment size (ATTENTION: this must be done first)

allocator.alignment_size = (size_t)(1 << align_power);

// Initialize memory

allocator.memory = memory_addr;

allocator.memory_size = align_size_down(memory_size);

// Calculate aligned block header size

allocator.aligned_header_size = align_size_up(sizeof(block_header_t));

// Initialize free blocks list

allocator.free_blocks = (block_header_t *)memory_addr;

allocator.free_blocks->size = allocator.memory_size - allocator.aligned_header_size;

allocator.free_blocks->next = NULL;

// Signal that allocator is initialized

allocator.is_init = true;

}

First, we calculate the alignment size based on the alignment power. It is crucial that we calculate this value before doing anything, since its value is used by the alignment functions we call later.

Then, we calculate the aligned header size and save the memory address. After that, we initialize the free_blocks variable so that it points to the initial heap memory address. We set its size to be equal to the total memory size minus the aligned size of the block header, and set the next pointer to NULL, since there are no other blocks in our memory. Finally, we set the boolean is_init to true.

It is important to point out that for alignment, we use two functions: align_size_up and align_size_down. Both take a single argument, representing a memory size in bytes, and return a size corresponding to the aligned size. However, align_size_up aligns the size by rounding up to the nearest multiple of the alignment size, while align_size_down rounds down.

We use align_size_down to align the total memory size, because if we used align_size_up, we could give the allocator more memory than it is allowed to use. By using align_size_down we may lose a few bytes of memory, but we avoid memory overrun bugs.

To calculate the aligned header size, on the other hand, we use align_size_up, since we have to make sure that the aligned size is big enough to contain the block header. As a rule, we will use align_size_up to calculate the aligned sizes of data stored in our heap.

The implementations of the memory alignment functions are very short, and use bitwise arithmetic:

static size_t align_size_up(size_t size) {

return (size + (allocator.alignment_size - 1)) &

~(allocator.alignment_size - 1);

}

static size_t align_size_down(size_t size) {

return (size & ~(allocator.alignment_size - 1));

}

Please note that the implementations above only work if the alignment size is a power of 2. Since the allocator_init function ensures that is the case, we don’t have to worry about this restriction.

Allocating Memory

After initializing the allocator, we are able to allocate memory. We do so by calling the allocator_malloc function:

void *allocator_malloc(size_t size) {

if (!allocator.is_init || size == 0 || allocator.free_blocks == NULL) {

return NULL;

}

size_t aligned_size = align_size_up(size);

block_header_t *previous_block = find_free_block(aligned_size);

block_header_t *found_block;

if (previous_block) {

found_block = previous_block->next; // get selected block

} else if (allocator.free_blocks &&

allocator.free_blocks->size >= aligned_size) {

found_block = allocator.free_blocks; // the first block is big enough

} else {

return NULL; // no suitable block found

}

// Split the block if necessary

size_t remaining = found_block->size - aligned_size;

// Check if the remaining bytes can hold a block header plus at least one byte

if (remaining > align_size_up(allocator.aligned_header_size + 1)) {

block_header_t *new_block =

(block_header_t *)(((uint8_t *)found_block + aligned_size) +

allocator.aligned_header_size);

new_block->size = remaining - allocator.aligned_header_size;

new_block->next = found_block->next;

found_block->size = aligned_size;

found_block->next = new_block;

}

if (previous_block) {

// Adjust the previous block to point to the next free block

previous_block->next = found_block->next;

} else {

// Update the free block list head

allocator.free_blocks = found_block->next;

}

return ((uint8_t *)found_block + allocator.aligned_header_size);

}

This function accepts one argument representing the size of the memory in bytes to allocate. It returns a pointer to the allocated memory block if the allocation was successful, or NULL if the allocation failed.

The steps performed by the function are explained below.

1. Initial Checks

The function first checks whether the allocator is initialized, whether the requested memory size is non-zero, and whether there are any free blocks available. If any of these conditions are not met, the function immediately returns NULL, indicating that the allocation failed.

if (!allocator.is_init || size == 0 || allocator.free_blocks == NULL) {

return NULL;

}

2. Align the Requested Size

The requested size is aligned up to the nearest multiple of the allocator’s alignment size using the align_size_up function.

size_t aligned_size = align_size_up(size);

3. Find a Suitable Free Block

The function calls find_free_block, with aligned_size as the argument, to search through the list of free blocks and find a block that is large enough to satisfy the request. The function returns a pointer to the previous free block in the list, or NULL if no suitable block is found.

block_header_t *previous_block = find_free_block(aligned_size);

The function find_free_block returns a pointer to the free block before the suitable block, because otherwise we would not be able to remove the found block from the linked list of free blocks. If our block headers also kept a reference to the previous block, this would not be necessary.

find_free_block is implemented as follows:

static block_header_t *find_free_block(size_t size) {

block_header_t *current = allocator.free_blocks;

block_header_t *previous = NULL;

while (current) {

if (current->size >= size) {

return previous; // return the previous block to aid in block splitting

}

previous = current;

current = current->next;

}

return NULL;

}

The implementation is very simple, and the function just searches sequentially the list of free blocks until it finds a block large enough to hold the requested number of bytes.

4. Check Found Block

The function than checks if a suitable block was found, and sets the variable found_block to the block header. Otherwise, the function returns NULL if no block large enough was found.

block_header_t *previous_block = find_free_block(aligned_size);

block_header_t *found_block;

if (previous_block) {

found_block = previous_block->next; // get selected block

} else if (allocator.free_blocks &&

allocator.free_blocks->size >= aligned_size) {

found_block = allocator.free_blocks; // the first block is big enough

} else {

return NULL; // no suitable block found

}

5. Split the Block (if necessary)

If the found block is larger than needed, the block is split into two parts: the first part is used to satisfy the allocation request and the remaining part becomes a new free block. If the block was split, the new free block is placed after the original found block in the free blocks list.

// Split the block if necessary

size_t remaining = found_block->size - aligned_size;

// Check if the remaining bytes can hold a block header plus at least one byte

if (remaining > align_size_up(allocator.aligned_header_size + 1)) {

block_header_t *new_block =

(block_header_t *)(((uint8_t *)found_block + aligned_size) +

allocator.aligned_header_size);

new_block->size = remaining - allocator.aligned_header_size;

new_block->next = found_block->next;

found_block->size = aligned_size;

found_block->next = new_block;

}

6. Allocate Memory Block

The function then removes the found block from the free blocks list, signaling that the block has been allocated.

It does that by rearranging the list so the previous block points to the block after the found block, or if the found block is the first block in the list (the head of the list), by moving the head to the next block.

if (previous_block) {

// Adjust the previous block to point to the next free block

previous_block->next = found_block->next;

} else {

// Update the free block list head

allocator.free_blocks = found_block->next;

}

7. Return Pointer to Start of Allocated Block

Finally, the function returns a pointer to the start of the allocated memory block, skipping the block header. If we simply returned the address of the block starting at the beginning of the header, the caller of the function would end up overwriting the header, corrupting our allocator.

Note that we do not use the size of the header itself to calculate the memory offset, but the aligned_header_size we calculated in the allocator_init function. This ensures that the start address of the pointer we returned is aligned.

return ((uint8_t *)found_block + allocator.aligned_header_size);

Freeing Memory

The allocator_free function is responsible for returning a previously allocated block of memory back to free memory list, making it available for future allocations.

The function receives one argument named ptr, the pointer to the start of the allocated memory block. It has no return value.

void allocator_free(void *ptr) {

if (!allocator.is_init || !ptr) {

return;

}

// Get block header of memory location given by 'ptr'

block_header_t *block =

(block_header_t *)((uint8_t *)ptr - allocator.aligned_header_size);

block_header_t *next_free = allocator.free_blocks;

block_header_t *previous_free = NULL;

// Place the block back in sorted order to make merging possible

while (next_free && next_free < block) {

previous_free = next_free;

next_free = next_free->next;

}

// Merge with the next free block if possible

uint8_t *next_block =

((uint8_t *)block + allocator.aligned_header_size) + block->size;

if (next_block == ((uint8_t *)next_free)) {

block->size += next_free->size + allocator.aligned_header_size;

block->next = next_free->next;

} else {

block->next = next_free; // insert block before next free block

}

// Merge with the previous free block if possible

if (previous_free &&

(((uint8_t *)previous_free + allocator.aligned_header_size) +

previous_free->size) == ((uint8_t *)block)) {

previous_free->size += block->size + allocator.aligned_header_size;

previous_free->next = block->next;

} else if (previous_free) {

previous_free->next = block; // insert block after previous free block

} else {

allocator.free_blocks = block; // there was no previous free block, so freed

// block is the new head of the free list

}

}

1. Initial Checks

The function checks whether the given ptr is NULL or if the allocator is not initialized. If either condition is true, the function simply returns, as there is nothing to free or the allocator is not ready to be used.

if (!allocator.is_init || !ptr) {

return;

}

// Get block header of memory location given by 'ptr'

block_header_t *block =

(block_header_t *)((uint8_t *)ptr - allocator.aligned_header_size);

block_header_t *next_free = allocator.free_blocks;

block_header_t *previous_free = NULL;

// Place the block back in sorted order to make merging possible

while (next_free && next_free < block) {

previous_free = next_free;

next_free = next_free->next;

}

// Merge with the next free block if possible

uint8_t *next_block =

((uint8_t *)block + allocator.aligned_header_size) + block->size;

if (next_block == ((uint8_t *)next_free)) {

block->size += next_free->size + allocator.aligned_header_size;

block->next = next_free->next;

} else {

block->next = next_free; // insert block before next free block

}

// Merge with the previous free block if possible

if (previous_free &&

(((uint8_t *)previous_free + allocator.aligned_header_size) +

previous_free->size) == ((uint8_t *)block)) {

previous_free->size += block->size + allocator.aligned_header_size;

previous_free->next = block->next;

} else if (previous_free) {

previous_free->next = block; // insert block after previous free block

} else {

allocator.free_blocks = block; // there was no previous free block, so freed

// block is the new head of the free list

}

}

2. Calculate the Block Header Address

The function calculates the address of the block header associated with the memory block by subtracting the aligned header size from the ptr address. This is because the actual data pointer is located just after the block header in the memory.

// Get block header of memory location given by 'ptr'

block_header_t *block =

(block_header_t *)((uint8_t *)ptr - allocator.aligned_header_size);

block_header_t *next_free = allocator.free_blocks;

block_header_t *previous_free = NULL;

3. Find the Insertion Point in the Free List

The function traverses the list of free blocks to find the appropriate place to insert the block being freed. For this allocator to work correctly, free blocks must be inserted in the list in the exact order they are located in the memory, from lower addresses to higher addresses.

The function also keeps track of the previous and next free blocks relative to where the new block should be inserted.

// Place the block back in sorted order to make merging possible

while (next_free && next_free < block) {

previous_free = next_free;

next_free = next_free->next;

}

4. Merge with Next Free Block or Insert into Free List

If the block being freed is located just before an existing free block (either before or after in memory), the function merges these blocks into a single larger free block. This helps in reducing fragmentation and making larger blocks available for future allocations.

If merging with the next free block is not possible, the function just inserts the new freed block before the next free block in the list.

// Merge with the next free block if possible

uint8_t *next_block =

((uint8_t *)block + allocator.aligned_header_size) + block->size;

if (next_block == ((uint8_t *)next_free)) {

block->size += next_free->size + allocator.aligned_header_size;

block->next = next_free->next;

} else {

block->next = next_free; // insert block before next free block

}

5. Merge with Previous Free Block or Insert into Free List

In the final step, the function tries to merge the block being freed with the previous free block in the list. If this is not possible, then the block is inserted in the list after the previous free block or placed in the head of the list if there is no previous free block.

// Merge with the previous free block if possible

if (previous_free &&

(((uint8_t *)previous_free + allocator.aligned_header_size) +

previous_free->size) == ((uint8_t *)block)) {

previous_free->size += block->size + allocator.aligned_header_size;

previous_free->next = block->next;

} else if (previous_free) {

previous_free->next = block; // insert block after previous free block

} else {

allocator.free_blocks = block; // there was no previous free block, so freed

// block is the new head of the free list

}

Should We Use Dynamic Memory Allocation in Embedded Systems?

In general, I believe that the answer is no, unless you really need it or you are forced to use it.

“Uncle Bob” has an interesting quote about dynamic vs static allocation:

Dynamic things are more flexible than static things, so when you want that flexibility, and you can afford it, go dynamic. If you can’t afford it, stay static.

This is an interesting quote that I believe to be true in general, but it requires some extra nuance when we are talking about embedded systems.

Regarding the flexibility given by dynamic memory allocation, we have seen that in most cases, it is a double-edged sword. The use of dynamic allocation can introduce many types of bugs in our code, such as memory leaks, use after free and double frees.

Even if we are careful enough to avoid bugs, dynamic memory allocation almost inevitably brings with it non-determinism due to the complex ways allocations and de-allocations can interact, possibly resulting in allocation failures that are unacceptable in some classes of devices.

That’s one of the reasons many software development guidelines such as MISRA C and the NASA Jet Propulsion Lab Coding Standard severely limit the use of dynamic allocations, or outright ban it.

Moreover, dynamic allocation also has an execution and memory overhead when compared with static memory. Allocation and deallocations take more time than accessing arrays, and any allocator implementation has a memory overhead, as we explained in the previous sections.

These are some of the costs of dynamic allocation that Uncle Bob says we have to be able to afford if we want to use it.

Taking Uncle Bob’s quote above, flipping it on its head and thinking from an embedded perspective, we could say the following:

Static things are more predictable and performant than dynamic things, so when you want that predictability and performance, and you can afford being less flexible, stay static. If you can’t afford it, go dynamic (with much care if you are working on safety-critical systems).

I don’t want to leave you with the impression that dynamic allocations should never be used in embedded systems. There are cases where they can be very useful, with little to no drawbacks.

Here are a couple of good ways to use dynamic memory allocation in embedded systems, and the situations where they might be useful.

- Allocating memory only at the start of the program and never de-allocating the memory

This can be useful if there is some configuration our device accesses every time it is started, and this configuration can change based on the model of the device or external conditions.

Of course, as we pointed out earlier, the memory used in dynamic allocation is just memory and is limited, so we could just pre-allocate statically the maximum possible amount of memory reserved for dynamic allocation and use only what is required by the configuration. But if there are different types of data that need to be allocated, using dynamic allocations will make our code cleaner.

We just have to make sure that the configuration won’t require more memory than what we have available, since that would cause the allocation to fail.

- Allocating memory at the start of the program to do some initial setup, then de-allocating it, and allocating it again for other stuff before executing the program tasks

This is kind of a special version of the previous situation, but it in this case dynamic memory allocation brings the extra benefit of allowing us to re-use memory, helping with memory-constrained devices.

This situation won’t apply to most applications, but if you have a device that has some initial setup stage that requires a lot of memory that won’t be used again after the setup, dynamic allocation can be your only resort.

All in all, my advice is still to avoid dynamic memory allocation in embedded systems. But if you understand the risks associated with it, how it works, how it can fail and how it can be beneficial and are also very aware of the requirements (business and legal) of the device you are working on, you are more than able to make your own choices on the matter.